This blog was previously published in Datacenter Dynamics on Oct. 18, 2019.

Until now, the lines of demarcation influencing the design and role of a data center were, at least somewhat, clear and consistent. The local service area tended to extend about 150 miles, give or take. Inside the data center, resources such as compute and storage capacity, as well as the links connecting the data center to the access network, were sized for a predictable traffic load.

This is especially true within a multi-tenant data center (MTDC), where physical location is often dictated by the latency requirements of its tenants. An MTDC near a stock exchange, for example, is highly valuable to firms that require low-latency access to that exchange. The location of the users typically defines the network’s edge, while the data center’s location is dictated more by latency. Much of this is now changing.

Contraction at the Edge

Deployments of 5G and IoT are beginning to ramp up, enabling a new class of applications that require ultra-reliable, low-latency (URLL) performance. One effect is the shrinking of the traditional local service area, which brings the data center ever closer to the network edge. Today, the lines, and even the roles, separating the two are starting to blur.
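
To put rough numbers on why distance matters, here is a back-of-envelope sketch (not a latency model) that estimates round-trip propagation delay over fiber for a few service-area radii. It assumes a group index of about 1.468 for standard single-mode fiber, so light travels at roughly two-thirds of its vacuum speed (about 5 microseconds per kilometer). It also treats the given distance as the actual fiber path and ignores switching, queuing and routing detours. The function name `fiber_rtt_ms` is illustrative.

```python
# Speed of light in vacuum, km/s
C_VACUUM_KM_S = 299_792.458
# Assumed group index of standard single-mode fiber (approximate value)
FIBER_GROUP_INDEX = 1.468
KM_PER_MILE = 1.609344


def fiber_rtt_ms(distance_miles: float, group_index: float = FIBER_GROUP_INDEX) -> float:
    """Round-trip propagation delay over a fiber path, in milliseconds.

    Treats distance_miles as the one-way fiber path length and ignores
    switching, queuing, serialization and routing detours.
    """
    distance_km = distance_miles * KM_PER_MILE
    speed_km_s = C_VACUUM_KM_S / group_index  # roughly 204,000 km/s in fiber
    one_way_s = distance_km / speed_km_s
    return 2 * one_way_s * 1000


for miles in (150, 50, 10):
    print(f"{miles:>4} miles: {fiber_rtt_ms(miles):.3f} ms round trip")
```

Under these assumptions, a 150-mile path spends more than 2 ms per round trip in the fiber alone, before any processing. That is why shrinking the service area matters so much for URLL applications.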

Of course, this trend is not entirely new. For years, content providers have deployed more and more resources closer to their subscribers to support content caching for cost savings and reduced latency. Now, however, other types of networks are finding strong use cases and doing the same. While MTDCs must find a way to reposition themselves, carrier networks, many of which have struggled with falling ARPU (average revenue per user), are finding new opportunities in the increased Edge-based activity.

Ultra-reliable, low latency becomes a capacity issue

Driving the concept further are the machine-to-machine latency requirements of IoT and the deluge of data that billions of connected devices will produce. Both make increasing capacity critical. Yet there is a limit to how much fiber can be deployed, so operators must look at other methods for adding bandwidth. Wavelength division multiplexing (WDM) may be one piece of the puzzle; another is to keep reducing the distance the data must travel.
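
The capacity argument for WDM comes down to simple multiplication. Each wavelength is an independent carrier, so one fiber pair carries roughly the number of channels times the per-channel rate. The sketch below uses that to estimate how many fiber pairs a given demand would need. The `WdmLink` class, the `fiber_pairs_needed` helper, and the demand, channel counts and line rates in the example are all hypothetical, chosen only for illustration. Real systems are also limited by optics, amplification and reach.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class WdmLink:
    """Hypothetical description of one WDM-equipped fiber pair."""
    channels: int            # wavelengths multiplexed onto the fiber pair
    gbps_per_channel: float  # line rate carried by each wavelength

    def capacity_gbps(self) -> float:
        # Each wavelength is an independent carrier, so capacity scales linearly.
        return self.channels * self.gbps_per_channel


def fiber_pairs_needed(demand_gbps: float, link: WdmLink) -> int:
    """Smallest number of fiber pairs whose combined capacity meets the demand."""
    per_pair = link.capacity_gbps()
    if per_pair <= 0:
        raise ValueError("link capacity must be positive")
    return math.ceil(demand_gbps / per_pair)


demand = 1_600  # Gb/s of edge-to-core traffic (illustrative figure)
options = {
    "single 100G wavelength": WdmLink(channels=1, gbps_per_channel=100),
    "18-channel CWDM at 10G": WdmLink(channels=18, gbps_per_channel=10),
    "40-channel DWDM at 100G": WdmLink(channels=40, gbps_per_channel=100),
}
for name, link in options.items():
    print(f"{name}: {fiber_pairs_needed(demand, link)} fiber pair(s)")
```

With these illustrative figures, the same demand that would take 16 single-wavelength fiber pairs fits on one DWDM pair, which is the sense in which WDM adds bandwidth without adding fiber.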

There are a number of strategies for shortening the data path. From a network design perspective, operators will need to keep more traffic "east-west" (local), rather than relying on longer "north-south" hops back and forth between the data centers and the Edge. Meeting the higher reliability requirements will also call for more parallel links.
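
Why parallel links help can be estimated with the standard formula for redundant paths. If each link is available a fraction a of the time and failures are independent, all n links are down together with probability (1 − a)^n, so the path availability is 1 − (1 − a)^n. The helper names and the 0.999 per-link figure below are hypothetical. The independence assumption is also optimistic, since links that share a conduit or route tend to fail together.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def parallel_availability(link_availability: float, n_links: int) -> float:
    """Availability of n parallel links, assuming failures are independent.

    The path is down only when every link is down at the same time.
    """
    if not 0.0 <= link_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if n_links < 1:
        raise ValueError("need at least one link")
    return 1.0 - (1.0 - link_availability) ** n_links


def downtime_minutes_per_year(availability: float) -> float:
    """Expected unavailable minutes in a 365-day year."""
    return (1.0 - availability) * MINUTES_PER_YEAR


for n in (1, 2, 3):
    a = parallel_availability(0.999, n)  # 0.999 per link is an illustrative figure
    print(f"{n} link(s): availability {a:.9f}, ~{downtime_minutes_per_year(a):.4f} min/year down")
```

Under the independence assumption, going from one link to two cuts expected downtime from hundreds of minutes a year to under a minute. Physically diverse routes are what make that assumption closer to true.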

The bottom line is that networks will need to keep building out their Edge-based resources in order to consume more data locally. This not only enables them to meet URLL demands but can also become an effective strategy for conserving bandwidth.

Cloud integration and effects in the data center

All this will affect the design and, to a certain extent, the role of MTDCs. As the network service area contracts, the resources deployed at the Edge will be much better equipped to handle the performance demands than the traditional (regional) MTDC solutions. In addition, requirements for lower cost, smaller footprint and smaller service areas will further challenge the existing MTDC business models.

The cloud will play a key role as carriers and content service providers look to adapt to this new environment. CSPs will gravitate to large cloud deployments, while smaller cloud instances dominate at the Edge. The major challenge will be extending a distributed cloud structure across many geographic locations while providing service automation and maintaining security control.

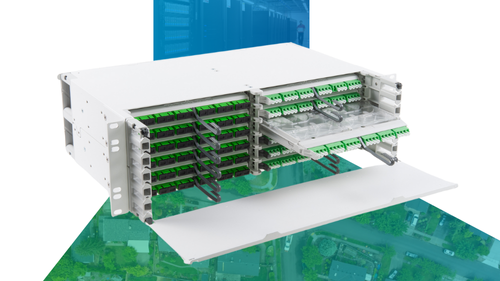

While the types of data traffic shuttled between the Edge and the core data centers will change, this should have little effect on the existing software and control systems. The infrastructure, especially the amount of fiber, will absolutely need to change. Edge-to-core traffic will drive the need for more Ethernet, and fiber will be the key to success, with evolving high-density fiber cable and apparatus solutions emerging to meet these requirements.

There is also a role for long-haul, high-capacity options, as well as for the bandwidth-boosting effects of wavelength division multiplexing applications such as coarse wavelength division multiplexing (CWDM) and dense wavelength division multiplexing (DWDM).
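
The practical difference between CWDM and DWDM is mostly channel spacing. As a rough illustration, the sketch below generates the CWDM wavelength grid defined in ITU-T G.694.2 (18 channels from 1271 nm to 1611 nm, 20 nm apart). It also generates channels on the ITU-T G.694.1 DWDM frequency grid, which is anchored at 193.1 THz with spacings such as 100 GHz or 50 GHz. The function names are illustrative. Real deployments use only a subset of these channels, depending on the optics and amplifiers involved.

```python
# Speed of light in vacuum, m/s
C_M_PER_S = 299_792_458


def cwdm_wavelengths_nm() -> list[int]:
    """CWDM grid per ITU-T G.694.2: 18 wavelengths, 1271-1611 nm, 20 nm apart."""
    return [1271 + 20 * k for k in range(18)]


def dwdm_channel_thz(n: int, spacing_ghz: float = 100.0) -> float:
    """DWDM grid per ITU-T G.694.1: 193.1 THz + n * spacing.

    n is a signed channel offset from the 193.1 THz anchor frequency.
    """
    return 193.1 + n * spacing_ghz / 1000.0


def thz_to_nm(freq_thz: float) -> float:
    """Convert an optical frequency in THz to a vacuum wavelength in nm."""
    return C_M_PER_S / (freq_thz * 1e12) * 1e9


cwdm = cwdm_wavelengths_nm()
print(f"CWDM: {len(cwdm)} channels, {cwdm[0]}-{cwdm[-1]} nm")

# A few 100 GHz DWDM channels around the anchor frequency
for n in range(-2, 3):
    f = dwdm_channel_thz(n)
    print(f"DWDM n={n:+d}: {f:.1f} THz = {thz_to_nm(f):.2f} nm")
```

Near 1550 nm, a 100 GHz DWDM step is only about 0.8 nm, roughly 25 times tighter than the 20 nm CWDM spacing. This is why DWDM packs far more channels onto a fiber, at the cost of more precise and more expensive optics.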

It is important to note that these next-generation networks will not be built from the ground up; operators will adapt what they have, leading to hybrid multivendor systems that mix new and existing components. The infrastructure will be complex, and getting it right is a tall order. But if done well, it will lead to a more efficient and simplified network, able to grow and adapt to meet new and unimagined demands.